Overview

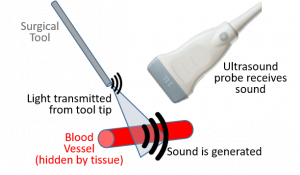

The PULSE Lab conducts a wide range of research projects to develop novel ultrasound and photoacoustic imaging system components for diagnostic and surgical applications. Ultrasound imaging relies on the transmission and reception of sound. Photoacoustic imaging uses both light and sound: laser pulses illuminate a region of interest, the tissue absorbs the light and undergoes thermal expansion, and the resulting sound waves are detected with conventional ultrasound transducers. As a result, ultrasound images display differences in acoustic impedance, while photoacoustic images display differences in optical absorption. When the same ultrasound transducer is used for sound detection, ultrasound and photoacoustic images are inherently co-registered, so ultrasound imaging can provide the structural information needed to put photoacoustic images in anatomical context.

As an example, our novel approach to photoacoustic-guided surgery is illustrated here: specialized light delivery systems are custom designed in our lab to attach to specific surgical tools, with the goal of helping surgeons visualize major blood vessels that are hidden during surgery. Accidental injury to these vessels often leads to major surgical complications, including patient death.

We develop three classes of innovative imaging systems: (1) photoacoustic imaging systems that will improve navigation during surgery, (2) ultrasound imaging systems that will improve diagnostic and surgical outcomes, and (3) robotic systems that will improve ultrasound and photoacoustic imaging procedures. Four core research areas support our overall goal of developing innovative ultrasound and photoacoustic imaging systems:

- Light Delivery Systems for Intraoperative Photoacoustics

- Laser-Tissue Interactions

- Robot-Assisted Imaging

- Advanced Beamforming Methods (including our novel Deep Learning Applications)

Eight of our clinical applications are listed here and featured below:

- Neurosurgery Navigation with Intraoperative Photoacoustics

- Photoacoustic-Guided Hysterectomy Performed with a da Vinci Surgical Robot

- Photoacoustic Imaging for Spine Surgery

- Coherence-Based Breast Imaging

- Enhancing Needle Visualization and Navigation in Challenging Acoustic Environments

- Reducing Acoustic Clutter in Echocardiography with SLSC Beamforming

- Force-Controlled Robot to Standardize Tissue Elasticity Measurements

- Photoacoustic Imaging of Prostate Brachytherapy Seeds

Additional clinical applications that are not featured on this page are available by searching our Publications page.

Datasets and code related to our research are available on our Software page.

Check out this Invited Perspective to learn more about our conceptualization of photoacoustic-guided surgery:

- Bell MAL, Photoacoustic imaging for surgical guidance: Principles, applications, and outlook, Journal of Applied Physics, 128(6):060904, 2020 [pdf]

*According to the journal’s website: “Perspective articles present an expert viewpoint on topics currently generating a lot of interest in the research community. While perspectives generally provide a brief overview of the topic, their main purpose is to provide a forward looking view on where progress in a particular research area is heading.”

General Research Areas

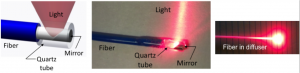

Light Delivery Systems for Intraoperative Photoacoustics

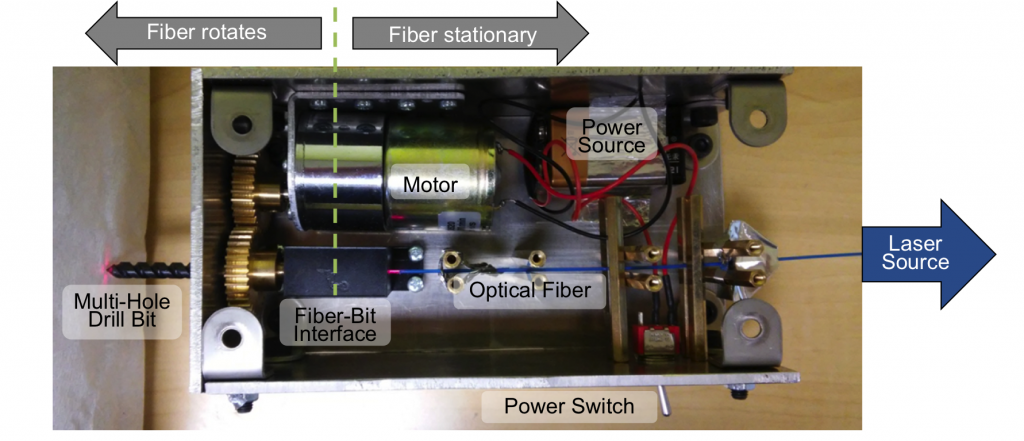

Photoacoustic imaging has traditionally been performed by either integrating the light source with the ultrasound transducer or maintaining a fixed distance between the light source and acoustic receiver. Our laboratory takes an unconventional approach to photoacoustic system designs by flexibly separating the optics from the acoustics and attaching optical fibers to surgical tools. We are developing novel light delivery systems based on this approach for multiple clinical applications.

Drill Designs

- Eddins B and Bell MAL, Design of a multifiber light delivery system for photoacoustic-guided surgery, Journal of Biomedical Optics, 22(4), 041011, 2017 [pdf] [featured in SPIE Newsroom]

- Shubert J and Bell MAL, A novel drill design for photoacoustic guided surgeries, Proceedings of SPIE Photonics West, San Francisco, CA, January 28-31, 2018 [pdf]

Internal & Interstitial Illumination

- Bell MAL, Guo X, Song DY, Boctor EM. Transurethral light delivery for prostate photoacoustic imaging, Journal of Biomedical Optics, 20(3):036002, 2015. [full text]

- Bell MAL, Kuo N, Song DY, Kang J, Boctor EM. In vivo visualization of prostate brachytherapy seeds with photoacoustic imaging, Journal of Biomedical Optics, 19(12):126011, 2014. [full text]

Laser-Tissue Interactions

Because our unconventional approach to photoacoustic imaging separates the optics from the acoustics, the laser can be in direct contact with tissues other than skin and eyes, and the laser safety limits for this kind of interventional photoacoustics are poorly defined. Our laboratory is focused on providing the scientific body of knowledge required to define safety limits for multiple tissue types through Monte Carlo simulations of light propagation and experimental testing. The animation shows a 3D Monte Carlo simulation of the normalized fluence distribution in prostate tissue implanted with three brachytherapy seeds.
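To give a rough sense of what a Monte Carlo simulation of light propagation involves, here is a minimal one-dimensional sketch in Python. It is not the simulation behind the animation or the publications listed on this page. It assumes a homogeneous medium, isotropic scattering, a pencil beam entering at the surface, and hypothetical optical coefficients (`mu_a`, `mu_s`); the function name and all parameter values are illustrative only.

```python
import math
import random


def simulate_normalized_fluence(n_photons=2000, mu_a=0.5, mu_s=10.0,
                                depth_max=1.0, n_bins=20, seed=0):
    """Return a depth profile of fluence, normalized to its peak value.

    Assumptions (all hypothetical): the medium fills z >= 0 and is
    homogeneous, scattering is isotropic, and photons that cross back
    through z < 0 are lost. mu_a and mu_s are in 1/cm, depth in cm.
    """
    rng = random.Random(seed)
    mu_t = mu_a + mu_s           # total interaction coefficient
    albedo = mu_s / mu_t         # fraction of weight that survives each event
    bin_width = depth_max / n_bins
    absorbed = [0.0] * n_bins

    for _ in range(n_photons):
        z, uz, weight = 0.0, 1.0, 1.0   # launch at the surface, heading down
        # Simple weight cutoff (no roulette), so a little energy is discarded.
        while weight > 1e-4:
            # Sample the free path length from an exponential distribution.
            step = -math.log(1.0 - rng.random()) / mu_t
            z += uz * step
            if z < 0.0:
                break                   # photon escaped through the surface
            # Deposit the absorbed fraction of the packet weight at this depth.
            index = int(z / bin_width)
            if index < n_bins:
                absorbed[index] += weight * (1.0 - albedo)
            weight *= albedo
            # Isotropic scattering: new direction cosine uniform in [-1, 1].
            uz = 2.0 * rng.random() - 1.0

    # With constant mu_a and equal bins, absorbed energy is proportional to
    # fluence, so normalizing the absorbed profile gives normalized fluence.
    peak = max(absorbed)
    if peak == 0.0:
        return absorbed
    return [value / peak for value in absorbed]
```

Calling `simulate_normalized_fluence()` returns one value per depth bin. A realistic tissue model would add anisotropic scattering, three spatial dimensions, tissue boundaries, and embedded objects such as brachytherapy seeds.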

Related Publications:

- Huang J, Wiacek A, Kempski KM, Palmer T, Izzi J, Beck S, Bell MAL, Empirical Assessment of Laser Safety for Photoacoustic-Guided Liver Surgeries, Biomedical Optics Express, 12, 1205-1216, 2021 [pdf]

- Bell MAL, Guo X, Song DY, Boctor EM. Transurethral light delivery for prostate photoacoustic imaging, Journal of Biomedical Optics, 20(3):036002, 2015. [full text]

- Bell MAL, Dagle AB, Kazanzides P, Boctor EM. Experimental Assessment of Energy Requirements and Tool Tip Visibility for Photoacoustic-Guided Endonasal Surgery, Proceedings of SPIE Photonics West, San Francisco, CA, February 13-17, 2016. [full text]

This work is funded by NSF CAREER Award ECCS 1751522.

Robot-Assisted Imaging

Imaging tasks are often challenged by limited human motor control and perception. We incorporate robotic principles in our imaging system designs to overcome these challenges. For example, with cooperative robotic control, the user and the robot share control of the ultrasound probe, thereby enabling similar maneuverability to a free-hand approach. Although the end effector is shown as an ultrasound probe, this and other control laws (e.g., autonomous control, teleoperative control) can be applied to the probe, optical fiber, and/or surgical tools.
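One common way to realize cooperative control is an admittance-style law, in which the force the user applies to the probe is mapped to a commanded probe velocity. The Python sketch below illustrates that idea only. The text above does not specify which control law is used, so the admittance form, the function name, the gain, the deadband, and the speed limit are all assumptions.

```python
import math


def cooperative_velocity(handle_force, gain=0.01, deadband=0.5,
                         max_speed=0.02):
    """Map user-applied force per axis (N) to commanded velocity (m/s).

    Hypothetical admittance law: velocity = gain * (force beyond deadband),
    clamped to +/- max_speed. All parameter values are illustrative.
    """
    velocity = []
    for force in handle_force:
        if abs(force) <= deadband:
            # Ignore small forces (sensor noise, resting hand): no motion.
            velocity.append(0.0)
            continue
        # Subtract the deadband so motion ramps up smoothly from zero.
        effective = force - math.copysign(deadband, force)
        commanded = gain * effective
        # Clamp so the robot never exceeds a safe speed near the patient.
        velocity.append(max(-max_speed, min(max_speed, commanded)))
    return velocity
```

With this kind of law the robot moves only while the user pushes, and the gain sets how freely the probe follows the hand. Autonomous or teleoperative control would replace the measured hand force with a planner or a remote input.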

Robotic Photoacoustic Applications

- Gubbi MR, Bell MAL, Deep Learning-Based Photoacoustic Visual Servoing: Using Outputs from Raw Sensor Data as Inputs to a Robot Controller, IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, May 30 – June 5, 2021 [pdf]

- Graham M, Assis F, Allman D, Wiacek A, González E, Gubbi M, Dong J, Hou H, Beck S, Chrispin J, Bell MAL, In vivo demonstration of photoacoustic image guidance and robotic visual servoing for cardiac catheter-based interventions, IEEE Transactions on Medical Imaging, 39(4):1015-1029, 2020 [pdf]

- González E, Bell MAL, GPU implementation of photoacoustic short-lag spatial coherence imaging for improved image-guided interventions, Journal of Biomedical Optics, 25(7):077002, 2020 [pdf]

- Bell MAL, Shubert J, Photoacoustic-based visual servoing of a needle tip, Scientific Reports, 8:15519, 2018 [pdf]

- Shubert J, Bell MAL, Photoacoustic Based Visual Servoing of Needle Tips to Improve Biopsy on Obese Patients, Proceedings of the 2017 IEEE International Ultrasonics Symposium, Washington, DC, September 6-9, 2017 [pdf]

- Gandhi N, Allard M, Kim S, Kazanzides P, Bell MAL, Photoacoustic-based approach to surgical guidance performed with and without a da Vinci robot, Journal of Biomedical Optics, 22(12), 121606, 2017 [pdf]

This work is partially funded by NSF CAREER Award ECCS 1751522 and NSF SCH Award IIS 2014088.

Robotic Ultrasound Applications

- Bell MAL, Kumar S, Kuo L, Sen HT, Iordachita I, Kazanzides P. Toward standardized ultrasound-based elasticity measurements with robotic force control, IEEE Transactions on Biomedical Engineering, 63(7):1517-24. 2016. [pdf]

- Bell MAL, Sen HT, Iordachita I, Kazanzides P, Wong J. In vivo reproducibility of robotic probe placement for a novel ultrasound-guided radiation therapy system, Journal of Medical Imaging, 1(2):025001, 2014. [full text]

- Sen HT, Bell MAL, Zhang Y, Ding K, Boctor EM, Wong J, Iordachita I, Kazanzides P, System integration and in-vivo testing of a robot for ultrasound guidance and monitoring during radiotherapy, IEEE Transactions on Biomedical Engineering, 64(7):1608-1618, 2017 [pdf]

- Sen HT, Bell MAL, Iordachita I, Wong J, Kazanzides P. A Cooperatively Controlled Robot for Ultrasound Monitoring of Radiation Therapy, Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, November 3-8, 2013. [full text]

Advanced Beamforming Methods

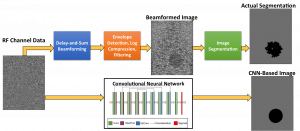

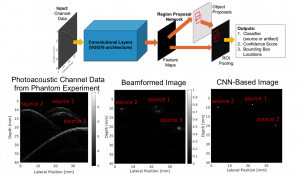

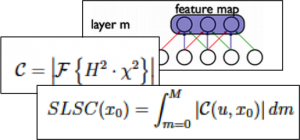

Accurate diagnoses and precise image-guided procedures hinge on medical imaging systems that provide outstanding image quality. In ultrasound and photoacoustic imaging, the beamforming process is typically the first line of software defense against poor-quality images. We are developing advanced beamformers and signal processing techniques to improve image quality. For example, our group is the first to demonstrate that deep learning can be used to bypass all traditional beamforming steps (including time delays) and use raw channel data to directly display specific features of interest in ultrasound and photoacoustic images. Our related publications in this area are listed below.
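For readers unfamiliar with the traditional time-delay step mentioned above, the following Python sketch shows a basic delay-and-sum beamformer for photoacoustic channel data. It is a textbook-style illustration under simplifying assumptions (a linear array at depth zero, a constant sound speed, nearest-sample delays, no apodization), not the beamformers developed in our lab. The function names and the synthetic point-source helper are hypothetical.

```python
import math


def simulate_point_source(source, element_x, sound_speed, fs, n_samples):
    """Create idealized channel data: one unit spike per element at the
    one-way arrival time from a point source at (x, z) in meters."""
    src_x, src_z = source
    data = []
    for ex in element_x:
        samples = [0.0] * n_samples
        arrival = math.hypot(src_x - ex, src_z) / sound_speed
        samples[int(round(arrival * fs))] = 1.0
        data.append(samples)
    return data


def delay_and_sum(channel_data, element_x, pixel, sound_speed, fs):
    """Beamform a single pixel from photoacoustic channel data.

    channel_data: one list of samples per element (time zero = laser pulse)
    element_x:    lateral element positions in meters (elements at z = 0)
    pixel:        (x, z) pixel position in meters
    """
    px, pz = pixel
    total = 0.0
    for samples, ex in zip(channel_data, element_x):
        # Photoacoustic signals travel one way, from the pixel to the element.
        distance = math.hypot(px - ex, pz)
        index = int(round(distance / sound_speed * fs))
        if 0 <= index < len(samples):
            total += samples[index]
    return total
```

Evaluating `delay_and_sum` at the true source location adds the spikes from every element coherently, while a pixel away from the source sums mostly zeros. Pulse-echo ultrasound additionally accounts for the transmit path to each pixel.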

Deep Learning Ultrasound Applications

- Hyun D, Wiacek A, Goudarzi S, Rothlübbers S, Asif A, Eickel K, Eldar YC, Huang J, Mischi M, Rivaz H, Sinden D, van Sloun RJG, Strohm H, Bell MAL, Deep Learning for Ultrasound Image Formation: CUBDL Evaluation Framework & Open Datasets, IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, 68(12):3466-3483, 2021 [pdf | datasets | code]

- Nair AA, Gubbi M, Tran TD, Reiter A, Bell MAL, A Generative Adversarial Neural Network for Beamforming Ultrasound Images: Invited Presentation, 53rd Annual Conference on Information Sciences and Systems, Baltimore, MD, March 20-22, 2019 [pdf]

- Nair AA, Tran T, Reiter A, Bell MAL, A deep learning based alternative to beamforming ultrasound images, IEEE International Conference on Acoustics, Speech and Signal Processing, Calgary, Alberta, Canada, April 15-20, 2018 [pdf]

- Nair AA, Tran T, Bell MAL, A fully convolutional neural network for beamforming ultrasound images, Proceedings of the 2018 IEEE International Ultrasonics Symposium, Kobe, Japan, October 22-25, 2018 [pdf]

- Wiacek A, González E, Bell MAL, CohereNet: A Deep Learning Architecture for Ultrasound Spatial Correlation Estimation and Coherence-Based Beamforming, IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, 67(12):2574-2583, 2020 [featured on journal cover] [pdf]

- Nair AA, Washington K, Tran T, Reiter A, Bell MAL, Deep learning to obtain simultaneous ultrasound image and segmentation outputs from a single input of raw channel data, IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, 67(12):2493-2509, 2020 [pdf]

This work is funded by NIH Trailblazer Award R21-EB025621.

Deep Learning Photoacoustic Applications

- Allman D, Assis F, Chrispin J, Bell MAL, Deep Learning to Detect Catheter Tips in Vivo During Photoacoustic-Guided Catheter Interventions: Invited Presentation, 53rd Annual Conference on Information Sciences and Systems, Baltimore, MD, March 20-22, 2019 [pdf]

- Allman D, Reiter A, Bell MAL, Photoacoustic source detection and reflection artifact removal enabled by deep learning, IEEE Transactions on Medical Imaging, 37(6):1464-1477, 2018 [pdf | datasets | code]

- Allman D, Assis F, Chrispin J, Bell MAL, Deep neural networks to remove photoacoustic reflection artifacts in ex vivo and in vivo tissue, Proceedings of the 2018 IEEE International Ultrasonics Symposium, Kobe, Japan, October 22-25, 2018 [pdf]

- Allman D, Reiter A, Bell MAL, Exploring the effects of transducer models when training convolutional neural networks to eliminate reflection artifacts in experimental photoacoustic images, Proceedings of SPIE Photonics West, San Francisco, CA, January 28-31, 2018 [pdf]

- Allman D, Reiter A, Bell MAL, A Machine Learning Method to Identify and Remove Reflection Artifacts in Photoacoustic Channel Data, Proceedings of the 2017 IEEE International Ultrasonics Symposium, Washington, DC, September 6-9, 2017 [pdf]

- Reiter A and Bell MAL, A machine learning approach to detect point sources in photoacoustic data, Proceedings of SPIE Photonics West, San Francisco, CA, January 28 – February 2, 2017 [pdf]

Coherence-Based Photoacoustic Beamforming

- Graham MT, Bell MAL, Photoacoustic Spatial Coherence Theory and Applications to Coherence-Based Image Contrast and Resolution, IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, 67(10):2069-2084, 2020 [pdf]

- González E, Bell MAL, GPU implementation of photoacoustic short-lag spatial coherence imaging for improved image-guided interventions, Journal of Biomedical Optics, 25(7):077002, 2020 [pdf]

- Stephanian B, Graham MT, Hou H, Bell MAL, Additive noise models for photoacoustic spatial coherence theory, Biomedical Optics Express, 9(11):5566-5582, 2018 [pdf]

- Graham MT, Bell MAL, Theoretical Application of Short-Lag Spatial Coherence to Photoacoustic Imaging, Proceedings of the 2017 IEEE International Ultrasonics Symposium, Washington, DC, September 6-9, 2017 [pdf]

- Bell MAL, Kuo N, Song DY, Boctor EM. Short-lag spatial coherence beamforming of photoacoustic images for enhanced visualization of prostate brachytherapy seeds, Biomedical Optics Express, 4(10): 1964-77. 2013. [full text]

This work is partially funded by NSF CAREER Award ECCS 1751522.

Coherence-Based Ultrasound Beamforming

- Wiacek A, Oluyemi E, Myers K, Mullen L, Bell MAL, Coherence-based beamforming increases the diagnostic certainty of distinguishing fluid from solid masses in breast ultrasound exams, Ultrasound in Medicine and Biology, 46(6):1380-1394, 2020 [pdf]

- Wiacek A, Rindal OMH, Falomo E, Myers K, Fabrega-Foster K, Harvey S, Bell MAL, Robust Short-Lag Spatial Coherence Imaging of Breast Ultrasound Data: Initial Clinical Results, IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, 66(3):527-540, 2019 [pdf]

- Nair AA, Tran T, Bell MAL, Robust Short-Lag Spatial Coherence Imaging, IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, 65(3):366-377, 2018 [pdf]

- Lediju MA, Trahey GE, Byram BC, Dahl JJ. Spatial coherence of backscattered echoes: Imaging characteristics, IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, 58(7):1377-88. 2011. [full text]

- Bell MAL, Goswami R, Kisslo JA, Dahl JJ, Trahey GE. Short-lag spatial coherence (SLSC) imaging of cardiac ultrasound data: Initial clinical results, Ultrasound in Medicine and Biology, 39(10):1861–74. 2013. [abstract | full text]

This work is partially funded by NIH R01 EB032960.

Clinical Applications

Neurosurgery Navigation with Intraoperative Photoacoustics

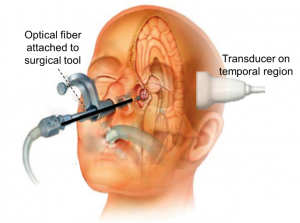

Endonasal transsphenoidal surgery, an effective approach for pituitary tumor resection, poses the serious risk of internal carotid artery injury, which causes many severe complications, including patient death. This injury occurs because the current endoscopic approach is limited to visualization of superficial anatomy. We are developing the imaging technology needed to visualize the carotid arteries hidden by bone and surrounding tissue and thereby eliminate the risk of injury. The concept for this intraoperative photoacoustic imaging technology includes an optical fiber attached to a surgical tool and a transcranial ultrasound probe placed on the temple, as demonstrated in the picture.

Related Publications:

- Graham MT, Dunne R, Bell MAL, Comparison of compressional and elastic wave simulations for patient-specific planning prior to transcranial photoacoustic-guided neurosurgery, Journal of Biomedical Optics, 26(7):076006, 2021 [featured on journal cover] [pdf]

- Graham MT, Huang J, Creighton F, Bell MAL, Simulations and human cadaver head studies to identify optimal acoustic receiver locations for minimally invasive photoacoustic-guided neurosurgery, Photoacoustics, 19:100183, 2020 [pdf]

- Bell MAL, Ostrowski AK, Li K, Kazanzides P, Boctor EM. Localization of Transcranial Targets for Photoacoustic-Guided Endonasal Surgeries, Photoacoustics, 3(2):78-87, 2015. [full text]

- Bell MAL, Ostrowski AK, Li K, Kazanzides P, Boctor EM. Quantifying bone thickness, light transmission, and contrast interrelationships in transcranial photoacoustic imaging, Proceedings of SPIE Photonics West, San Francisco, CA, February 7-12, 2015. [full text]

- Bell MAL, Ostrowski AK, Kazanzides P, Boctor EM. Feasibility of transcranial photoacoustic imaging for interventional guidance of endonasal surgeries, Proceedings of SPIE Photonics West, San Francisco, CA, February 1-6, 2014. [full text]

- Bell MAL, Dagle AB, Kazanzides P, Boctor EM. Experimental Assessment of Energy Requirements and Tool Tip Visibility for Photoacoustic-Guided Endonasal Surgery, Proceedings of SPIE Photonics West, San Francisco, CA, February 13-17, 2016. [full text]

- Kim S, Tan Y, Kazanzides P, Bell MAL. Feasibility of photoacoustic image guidance for telerobotic endonasal transsphenoidal surgery, Proceedings of the 2016 IEEE International Conference on Biomedical Robotics and Biomechatronics, University Town, Singapore, June 26-29, 2016 [full text]

This project was funded by NIH Grants R00-EB018994 and K99-EB018994.

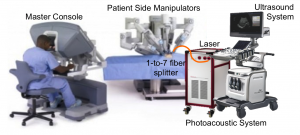

Photoacoustic-Guided Hysterectomy Performed with a da Vinci Surgical Robot

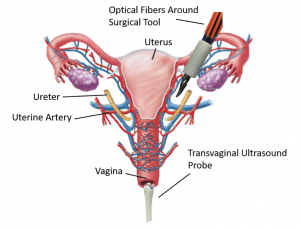

Approximately 600,000 hysterectomies are performed each year in the US to remove the uterus. This surgery requires cauterization and clipping of the uterine arteries, which are located close to and overlap the ureter, so the ureter can be accidentally injured. We are developing the imaging technology needed to visualize the uterine arteries and the nearby ureter, which are both hidden by surrounding tissue. Our goal is to eliminate the risk of accidental ureteral injury during robotic hysterectomies performed with the da Vinci surgical robot. The concept for this intraoperative photoacoustic imaging technology includes optical fibers that surround a da Vinci surgical tool and a transvaginal ultrasound probe placed to receive the resulting sound waves, as demonstrated in the picture.

Related Publications:

- Wiacek A, Wang KC, Wu H, Bell MAL, Photoacoustic-guided laparoscopic and open hysterectomy procedures demonstrated with human cadavers, IEEE Transactions on Medical Imaging, 40(12):3279-3292, 2021 [pdf]

- Wiacek A, Wang K, Bell MAL, Techniques to distinguish the ureter from the uterine artery in photoacoustic-guided hysterectomies, Proceedings of SPIE Photonics West, San Francisco, CA, February 2-7, 2019 [pdf]

- Allard M, Shubert J, Bell MAL, Feasibility of photoacoustic guided teleoperated hysterectomies, Journal of Medical Imaging: Special Issue on Image-Guided Procedures, Robotic Interventions, and Modeling, 5(2), 021213, 2018 [pdf] [featured in Health Data Management News]

- Gandhi N, Allard M, Kim S, Kazanzides P, Bell MAL, Photoacoustic-based approach to surgical guidance performed with and without a da Vinci robot, Journal of Biomedical Optics, 22(12), 121606, 2017 [pdf] [featured in BioOptics World]

This project is funded by NIH R01 EB032358 and was initially funded by a JHU Discovery Award.

Photoacoustic Imaging for Spine Surgery

It is well known that there are structural differences between cortical and cancellous bone. However, spine surgeons currently have no reliable method to noninvasively determine these differences in real time when choosing the optimal starting point and trajectory to insert pedicle screws and avoid surgical complications associated with breached or weakened bone. We are exploring 3D photoacoustic imaging of a human vertebra to noninvasively differentiate cortical from cancellous bone for this surgical task. We observed that signals from the cortical bone tend to appear as compact, high-amplitude signals, while signals from the cancellous bone have lower amplitudes and are more diffuse. In addition, we discovered that the location of the light source for photoacoustic imaging is a critical parameter that can be adjusted to noninvasively determine the optimal entry point into the pedicle. Our results are promising for the introduction and development of photoacoustic imaging systems to overcome a wide range of longstanding challenges with spinal surgeries, including bone breaches due to misplaced pedicle screws.

Related Publications:

- González E, Jain A, Bell MAL, Combined ultrasound and photoacoustic image guidance of spinal pedicle cannulation demonstrated with intact ex vivo specimens, IEEE Transactions on Biomedical Engineering, 68(8):2479-2489, 2021 [pdf]

- Shubert J, Bell MAL, Photoacoustic imaging of a human vertebra: Implications for guiding spinal fusion surgeries, Physics in Medicine and Biology, 63(14), 144001, 2018 [pdf]

- Shubert J, Bell MAL, A novel drill design for photoacoustic guided surgeries, Proceedings of SPIE Photonics West, San Francisco, CA, January 28-31, 2018 [pdf]

- González E, Wiacek A, Bell MAL, Visualization of custom drill bit tips in a human vertebra for photoacoustic-guided spinal fusion surgeries, Proceedings of SPIE Photonics West, San Francisco, CA, February 2-7, 2019 [pdf]

This project was funded in part by a sponsored research agreement with Cutting Edge Surgical, Inc. in 2017, with additional funding support from NSF CAREER Award ECCS 1751522.

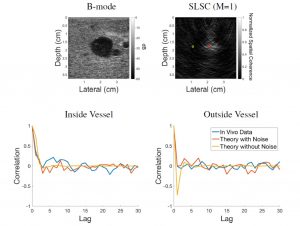

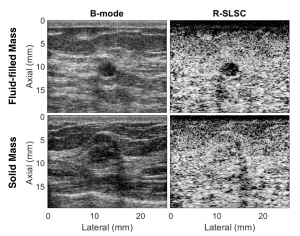

Coherence-Based Breast Imaging

Ultrasound is frequently used in conjunction with mammography to detect breast cancer as early as possible. However, due largely to the heterogeneity of breast tissue, ultrasound images are plagued with clutter that obstructs important diagnostic features. Short-lag spatial coherence (SLSC) imaging has proven to be effective at clutter reduction in noisy ultrasound images. M-Weighted SLSC and Robust SLSC (R-SLSC) imaging were recently introduced by our lab to further improve image quality at higher lag values, and R-SLSC imaging has the added benefit of enabling the adjustment of tissue texture to produce a tissue SNR that is quantitatively similar to B-mode speckle SNR. This work holds promise for using SLSC, M-Weighted SLSC, and/or R-SLSC imaging to distinguish between fluid-filled and solid hypoechoic breast masses, which has implications for improved breast cancer detection and screening, more streamlined diagnostic workups, and reduced patient anxiety over suspicious breast mass findings.
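As a rough illustration of the basic SLSC computation (not the M-Weighted or Robust variants), the Python sketch below follows the commonly used definition: for each element spacing (lag) m, the normalized cross-correlation between focused signals from element pairs separated by m is averaged over all such pairs, and the pixel value is the sum of these averages over lags 1 through M. The function name and input layout are assumptions made for this example, and production implementations are typically vectorized or run on a GPU.

```python
import math


def slsc_pixel(delayed, max_lag):
    """Short-lag spatial coherence value for one pixel.

    delayed: one list of samples per receive element, already time-delayed
             (focused) and cropped to a short axial kernel around the pixel.
    max_lag: M, the largest element separation included in the sum.
    """
    n_elements = len(delayed)
    if not 1 <= max_lag < n_elements:
        raise ValueError("max_lag must be between 1 and n_elements - 1")

    # Signal energy of each channel within the kernel (used to normalize).
    energy = [sum(s * s for s in channel) for channel in delayed]

    value = 0.0
    for lag in range(1, max_lag + 1):
        correlation_sum = 0.0
        n_pairs = n_elements - lag
        for i in range(n_pairs):
            denom = math.sqrt(energy[i] * energy[i + lag])
            if denom > 0.0:
                cross = sum(a * b for a, b in zip(delayed[i], delayed[i + lag]))
                correlation_sum += cross / denom
        # Average coherence at this lag, then accumulate over short lags.
        value += correlation_sum / n_pairs
    return value
```

Perfectly coherent signals across the aperture give a value close to `max_lag`, while incoherent clutter contributes values near zero, which is why coherence-based images tend to suppress clutter.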

Related Publications:

- Wiacek A, Oluyemi E, Myers K, Mullen L, Bell MAL, Coherence-based beamforming increases the diagnostic certainty of distinguishing fluid from solid masses in breast ultrasound exams, Ultrasound in Medicine and Biology, 46(6):1380-1394, 2020 [pdf]

- Wiacek A, Rindal OMH, Falomo E, Myers K, Fabrega-Foster K, Harvey S, Bell MAL, Robust Short-Lag Spatial Coherence Imaging of Breast Ultrasound Data: Initial Clinical Results, IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, 66(3):527-540, 2019 [pdf]

- Wiacek A, Myers K, Falomo E, Rindal OMH, Fabrega-Foster K, Harvey S, Bell MAL, Clinical feasibility of coherence-based beamforming to distinguish solid from fluid hypoechoic breast masses, Proceedings of the 2018 IEEE International Ultrasonics Symposium, Kobe, Japan, October 22-25, 2018 [pdf]

- AA Nair, T Tran, MAL Bell, Robust Short-Lag Spatial Coherence Imaging, IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, 65(3):366-377, 2018 [pdf]

This project is funded by NIH R01 EB032960.

Enhancing Needle Visualization & Navigation in Challenging Acoustic Environments

Percutaneous needle interventions are necessary for a multitude of tasks, such as ultrasound-guided biopsies or cyst aspirations. Despite the widespread utility, simplicity, safety, and cost-efficiency of ultrasound, imaging needles with it can be challenging in the presence of acoustic clutter. Therefore, we have introduced multiple approaches over the years to improve needle and needle tip visualization using ultrasound-based technology. These new approaches take advantage of advances in beamforming, signal processing, robotic integration, and photoacoustic principles.

Related Publications:

- Matrone G, Bell MAL, Ramalli A, Spatial Coherence Beamforming with Multi-Line Transmission to Enhance the Contrast of Coherent Structures in Ultrasound Images Degraded by Acoustic Clutter, IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, 68(12):3570-3582, 2021 [pdf] [International Collaboration]

- Gubbi MR, Bell MAL, Deep Learning-Based Photoacoustic Visual Servoing: Using Outputs from Raw Sensor Data as Inputs to a Robot Controller, IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, May 30 – June 5, 2021 [pdf]

- Bell MAL, Shubert J, Photoacoustic-based visual servoing of a needle tip, Scientific Reports, 8:15519, 2018 [pdf]

- Allman D, Reiter A, Bell MAL, Photoacoustic source detection and reflection artifact removal enabled by deep learning, IEEE Transactions on Medical Imaging, 37(6):1464-1477, 2018 [pdf | datasets | code]

- J Shubert, MAL Bell, Photoacoustic Based Visual Servoing of Needle Tips to Improve Biopsy on Obese Patients, Proceedings of the 2017 IEEE International Ultrasonics Symposium, Washington, DC, September 6-9, 2017 [pdf]

Reducing Acoustic Clutter in Echocardiography with SLSC Beamforming

Clutter is a problematic noise artifact in a variety of ultrasound applications. Clinical tasks complicated by the presence of clutter include detecting cancerous lesions in abdominal organs (e.g., the liver and bladder) and visualizing endocardial borders to assess cardiovascular health. Short-Lag Spatial Coherence (SLSC) imaging is a novel beamforming approach that calculates and displays the spatial coherence of received echoes, rather than amplitude information, to reduce acoustic clutter and improve contrast, contrast-to-noise, and signal-to-noise ratios when compared with conventional B-mode images. The video of the left ventricle of a beating heart demonstrates the benefits of SLSC over traditional B-mode imaging for a patient treated at the Duke University Medical Center. Clutter is reduced and the endocardial borders are better visualized with SLSC imaging, which has multiple implications for improved quantitative and qualitative diagnoses of cardiovascular health. SLSC imaging is additionally useful in any ultrasound application that suffers from large-amplitude clutter noise, including cardiac, liver, fetal, vascular, breast, and interventional (e.g., needle) imaging.
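The coherence calculation described above can be sketched for a single pixel as follows. This is a minimal illustration based on the commonly published SLSC definition (normalized cross-correlation between pairs of receive elements, averaged at each element spacing, or lag, and summed over the first few lags), not the lab's actual implementation. The function name `slsc_pixel`, the array layout, and the choice of `max_lag` are assumptions made for the example.

```python
import numpy as np

def slsc_pixel(channel_data, max_lag):
    """Short-lag spatial coherence value for one pixel.

    channel_data: array of shape (n_elements, n_samples) holding
        time-delayed (focused) channel signals within a short axial kernel
        centered on the pixel (assumed layout).
    max_lag: number of short lags M to sum over (must be < n_elements).
    """
    data = np.asarray(channel_data, dtype=float)
    n_elements = data.shape[0]
    if not 1 <= max_lag < n_elements:
        raise ValueError("max_lag must satisfy 1 <= max_lag < n_elements")

    value = 0.0
    for m in range(1, max_lag + 1):
        a = data[:-m]   # elements i
        b = data[m:]    # elements i + m
        num = np.sum(a * b, axis=1)
        den = np.sqrt(np.sum(a ** 2, axis=1) * np.sum(b ** 2, axis=1))
        corr = np.zeros_like(num)
        valid = den > 0  # skip element pairs with no signal energy
        corr[valid] = num[valid] / den[valid]
        value += corr.mean()  # average correlation at lag m
    return value

rng = np.random.default_rng(0)
# Identical echoes on every element: perfectly coherent signal.
coherent = np.tile(rng.standard_normal(16), (64, 1))
# Independent noise on every element: spatially incoherent, clutter-like signal.
incoherent = rng.standard_normal((64, 16))

coherent_value = slsc_pixel(coherent, max_lag=10)
incoherent_value = slsc_pixel(incoherent, max_lag=10)
```

In this sketch the coherent signal yields a value equal to the number of lags summed, while incoherent noise yields a value near zero regardless of its amplitude, which is why displaying coherence rather than amplitude can suppress large-amplitude clutter.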

Related Publications:

- Bell MAL, Goswami R, Kisslo JA, Dahl JJ, Trahey GE. Short-lag spatial coherence (SLSC) imaging of cardiac ultrasound data: Initial clinical results, Ultrasound in Medicine and Biology, 39(10):1861–74. 2013. [pdf]

- Bell MAL, Goswami R, Dahl JJ, Trahey GE. Improved Visualization of Endocardial Borders with Short-Lag Spatial Coherence (SLSC) Imaging of Fundamental and Harmonic Ultrasound Data, Proceedings of the 2012 IEEE International Ultrasonics Symposium, Dresden, Germany, October 7-10, 2012. [full text]

- Bell MAL, Dahl JJ, Trahey GE. Resolution and brightness characteristics of short-lag spatial coherence images, IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, 62(7):1265-1267, 2015. [featured on cover | full text]

- Lediju MA, Trahey GE, Byram BC, Dahl JJ. Spatial coherence of backscattered echoes: Imaging characteristics, IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, 58(7):1377-88. 2011. [full text]

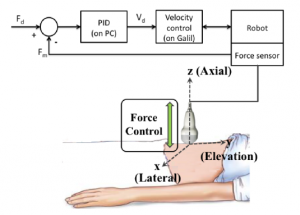

Force-Controlled Robot to Standardize Tissue Elasticity Measurements

Acoustic radiation force (ARF)-based elasticity imaging enables remote visualization and quantification of tissue stiffness. Focused high-energy acoustic pulses are transmitted to generate radiation force in tissue, and the resulting tissue displacements are tracked using ultrasound data acquired with a stationary probe. Previous work demonstrates that the quality and utility of ARF-based elasticity measurements depend on the applied probe pressure when organs are located near the skin surface and are, therefore, subjected to compressive forces from the ultrasound probe. We are investigating the use of robots to control the applied force for ARF-based elasticity measurements in three primary clinical scenarios: (1) longitudinal studies of the same patient, (2) comparative studies between patients, and (3) large-scale 3-D elasticity imaging. Potential clinical applications include imaging of the breast, skin, and left liver lobe.

Related Publications:

- Bell MAL, Kumar S, Kuo L, Sen HT, Iordachita I, Kazanzides P. Toward standardized ultrasound-based elasticity measurements with robotic force control, IEEE Transactions on Biomedical Engineering, 63(7):1517-24. 2016. [pdf]

- Bell MAL, Sen HT, Iordachita I, Kazanzides P, Wong J. In vivo reproducibility of robotic probe placement for a novel ultrasound-guided radiation therapy system, Journal of Medical Imaging, 1(2):025001, 2014. [full text]

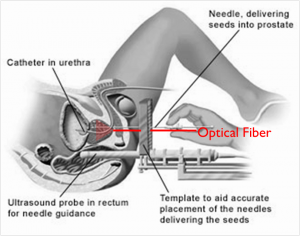

Photoacoustic Imaging of Prostate Brachytherapy Seeds

Brachytherapy is an increasingly popular treatment option for prostate cancer, administered by implanting tiny, metallic, radioactive seeds in the prostate. The seeds are currently visualized with ultrasound imaging during implantation, but they are sometimes difficult to locate due to factors like their poor acoustic contrast with the surrounding environment. Photoacoustic imaging, a method based on light emission, optical absorption, and the subsequent generation of sound waves, has promise as an alternative imaging method, as the optical absorption of metal is orders of magnitude larger than that of tissue and blood, resulting in significant photoacoustic contrast between brachytherapy seeds and the surrounding environment.
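The link between optical absorption and photoacoustic contrast can be written with the standard expression for the initial photoacoustic pressure, which holds under the usual assumptions of thermal and stress confinement. The symbols are not defined elsewhere on this page and are introduced here only for illustration:

$$
p_0 = \Gamma \, \mu_a \, F
$$

Here $p_0$ is the initial pressure rise, $\Gamma$ is the Grüneisen parameter, $\mu_a$ is the optical absorption coefficient, and $F$ is the local optical fluence. For a comparable fluence and Grüneisen parameter, a material with a much larger $\mu_a$, such as a metallic seed, generates a proportionally larger initial pressure than the surrounding tissue.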

Related Publications:

- Bell MAL, Guo X, Song DY, Boctor EM. Transurethral light delivery for prostate photoacoustic imaging, Journal of Biomedical Optics, 20(3):036002, 2015. [full text]

- Bell MAL, Kuo N, Song DY, Kang J, Boctor EM. In vivo visualization of prostate brachytherapy seeds with photoacoustic imaging, Journal of Biomedical Optics, 19(12):126011, 2014. [full text]