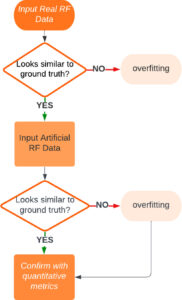

Overfitting Detection Method for DNN Beamformers

We designed a novel method that uses artificial channel data to rapidly detect overfitting in DNNs trained to output beamformed images. Its primary benefit is that it requires no time-consuming retraining, training code, training data, or collection of additional test data. Potential applications include quick sanity checks, public policy development, regulation creation, safeguarding against security breaches, network optimization, and benchmarking. An implementation is available here: https://gitlab.com/pulselab/OverfittingDetectionMethod.
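The exact inputs and decision criteria of the method are defined in the cited papers and the linked repository. As a rough, non-authoritative sketch of the general idea, the Python snippet below generates a few kinds of artificial channel data (binary and random, as suggested by the title of the 2022 paper) and passes them through a network. The helper names, the array shape, and `placeholder_model` are assumptions made for illustration only; a real trained DNN would take the place of the placeholder.

```python
import numpy as np


def make_artificial_inputs(shape, seed=0):
    """Build artificial channel-data arrays (hypothetical helper, not the repository's API)."""
    rng = np.random.default_rng(seed)
    return {
        "zeros": np.zeros(shape, dtype=np.float32),
        "ones": np.ones(shape, dtype=np.float32),
        # Each sample is drawn as 0 or 1 with equal probability.
        "binary": rng.integers(0, 2, size=shape).astype(np.float32),
        # Zero-mean, unit-variance Gaussian noise.
        "gaussian": rng.standard_normal(shape).astype(np.float32),
    }


def run_on_artificial_inputs(model, shape, seed=0):
    """Pass every artificial input through `model` and collect the outputs by name."""
    inputs = make_artificial_inputs(shape, seed)
    return {name: np.asarray(model(x)) for name, x in inputs.items()}


def placeholder_model(channel_data):
    """Stand-in for a trained DNN beamformer (assumption): sums magnitudes over channels."""
    return np.abs(channel_data).sum(axis=-1)


# Assumed layout: 64 depth samples by 32 receive channels.
outputs = run_on_artificial_inputs(placeholder_model, shape=(64, 32))
for name, image in outputs.items():
    print(name, image.shape, float(image.min()), float(image.max()))
```

No training data, training code, or retraining is involved in a check of this kind, only forward passes. How the resulting outputs should be interpreted is described in the cited papers.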

If you find this code or method useful, please cite our associated papers:

- Zhang J, Bell MAL, Overfit detection method for deep neural networks trained to beamform ultrasound images, Ultrasonics, 148:107562, 2025 [pdf]

- Zhang J, Wiacek A, Bell MAL, Binary and Random Inputs to Rapidly Identify Overfitting of Deep Neural Networks Trained to Output Ultrasound Images, Proceedings of the 2022 IEEE International Ultrasonics Symposium, Venice, Italy, October 10-13, 2022 [pdf]

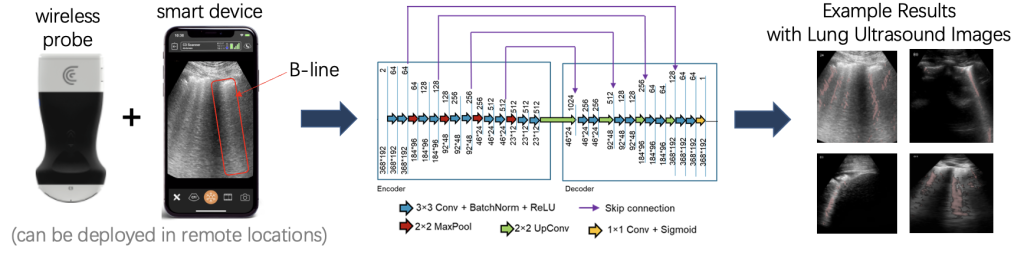

Simulated and Labeled In Vivo COVID-19 Dataset

We offer public access (https://gitlab.com/pulselab/covid19) to the datasets and code described in the paper “Detection of COVID-19 features in lung ultrasound images using deep neural networks.” Communications Medicine, 2024. https://www.nature.com/articles/s43856-024-00463-5.

Access includes simulated B-mode images containing A-line, B-line, and consolidation features with paired ground truth segmentations, as well as our segmentation annotations of publicly available point of care ultrasound (POCUS) datasets (originating from https://github.com/jannisborn/covid19_ultrasound).

If you use our datasets and/or code, please cite the following references:

- L. Zhao, T.C. Fong, M.A.L. Bell, “Detection of COVID-19 features in lung ultrasound images using deep neural networks”, Communications Medicine, 2024. https://www.nature.com/articles/s43856-024-00463-5

- L. Zhao, M.A.L. Bell (2023). Code for the paper “Detection of COVID-19 features in lung ultrasound images using deep neural networks”. Zenodo. https://doi.org/10.5281/zenodo.10324042

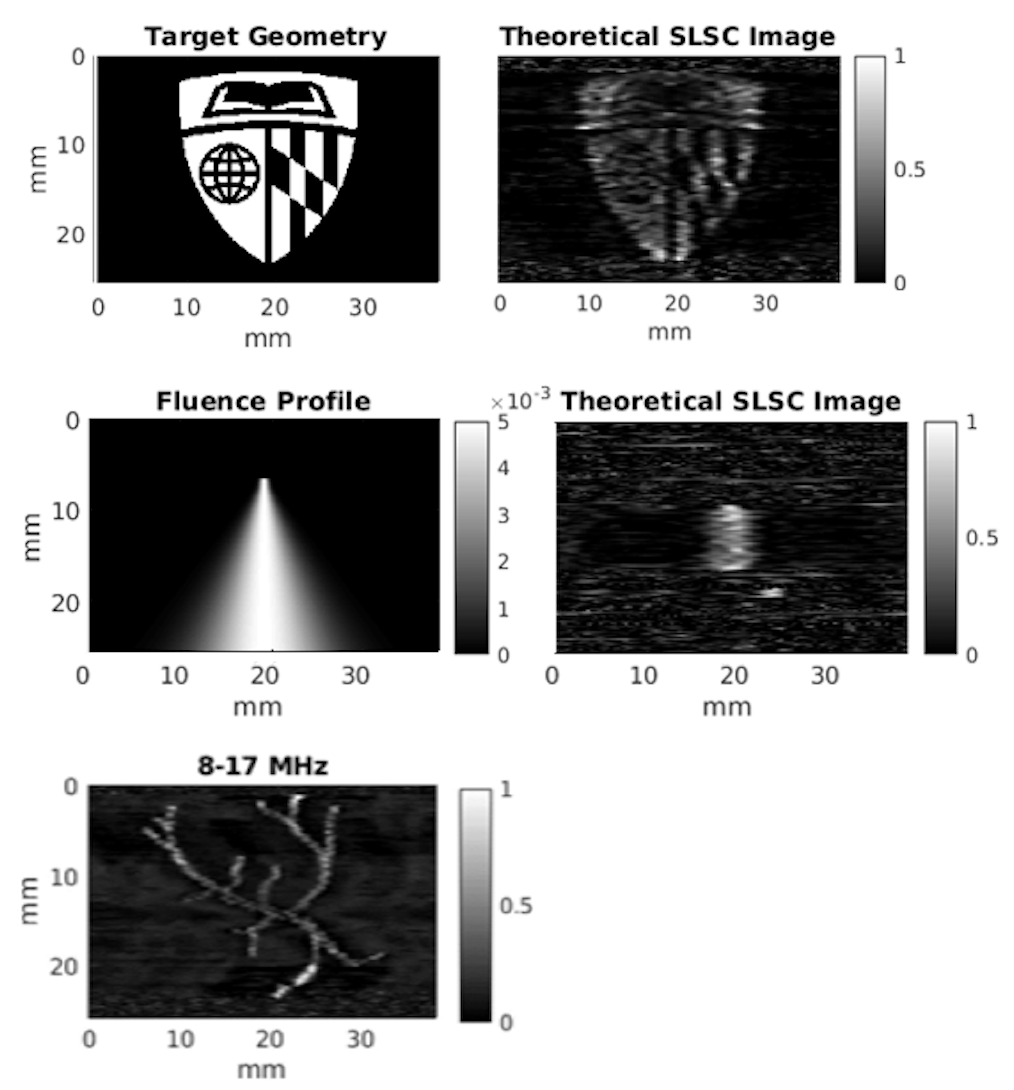

PhocoSpace

PhocoSpace is an open-source photoacoustic toolbox, currently provided as a MATLAB extension, created to simulate the coherence of photoacoustic signals correlated in the transducer space dimension. It is a flexible in silico tool to predict photoacoustic spatial coherence functions, determine expected photoacoustic short-lag spatial coherence (SLSC) image quality, and characterize multiple possible coherence-based photoacoustic image optimizations without requiring lengthy experimental data acquisition. In addition, this software package establishes a foundation for future investigations into alternative photoacoustic spatial coherence-based signal processing methods.
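For readers unfamiliar with the quantities involved, the Python sketch below shows one common way to estimate a spatial coherence function from focused channel data and to sum it over short lags to obtain a short-lag spatial coherence (SLSC) pixel value. It is not PhocoSpace's API (the toolbox is a MATLAB extension that predicts these functions from theory); the function names and the `(n_samples, n_elements)` data layout are assumptions for illustration.

```python
import numpy as np


def spatial_coherence(channel_data, max_lag):
    """Estimate the normalized spatial coherence for lags 1..max_lag.

    channel_data: real array of shape (n_samples, n_elements), holding the
    time-delayed (focused) signals of one axial kernel (assumed layout).
    Returns an array R where R[m - 1] is the coherence at element lag m.
    """
    n_samples, n_elements = channel_data.shape
    if not 1 <= max_lag < n_elements:
        raise ValueError("max_lag must be between 1 and n_elements - 1")

    coherence = np.zeros(max_lag)
    for m in range(1, max_lag + 1):
        a = channel_data[:, : n_elements - m]  # signals on elements i
        b = channel_data[:, m:]                # signals on elements i + m
        numerator = np.sum(a * b, axis=0)
        denominator = np.sqrt(np.sum(a**2, axis=0) * np.sum(b**2, axis=0))
        valid = denominator > 0                # skip element pairs with no signal
        if valid.any():
            # Average the normalized correlation over all valid element pairs.
            coherence[m - 1] = np.mean(numerator[valid] / denominator[valid])
    return coherence


def slsc_pixel(channel_data, max_lag):
    """SLSC value for one pixel: the coherence summed over lags 1..max_lag."""
    return float(np.sum(spatial_coherence(channel_data, max_lag)))


# Identical signals on every element are perfectly coherent at all lags.
signal = np.sin(np.linspace(0.0, 2.0 * np.pi, 16))
coherent_data = np.tile(signal[:, None], (1, 8))
print(spatial_coherence(coherent_data, max_lag=4))
print(slsc_pixel(coherent_data, max_lag=4))
```

The choice of `max_lag` trades resolution against noise suppression in the resulting image, which is one of the optimizations a simulation tool can explore without acquiring experimental data.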

If you use this toolbox (https://gitlab.com/pulselab/phocospace), please cite the following two references:

- M.T. Graham and M.A.L. Bell, “PhocoSpace: An open-source simulation package to implement photoacoustic spatial coherence theory,” in 2022 IEEE International Ultrasonics Symposium (IUS), IEEE 2022 (accepted; currently available as a preprint) [pdf]

- M.T. Graham and M.A.L. Bell, “Photoacoustic spatial coherence theory and applications to coherence-based image contrast and resolution,” IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, vol. 67, no. 10, pp. 2069-2084, 2020. [pdf]

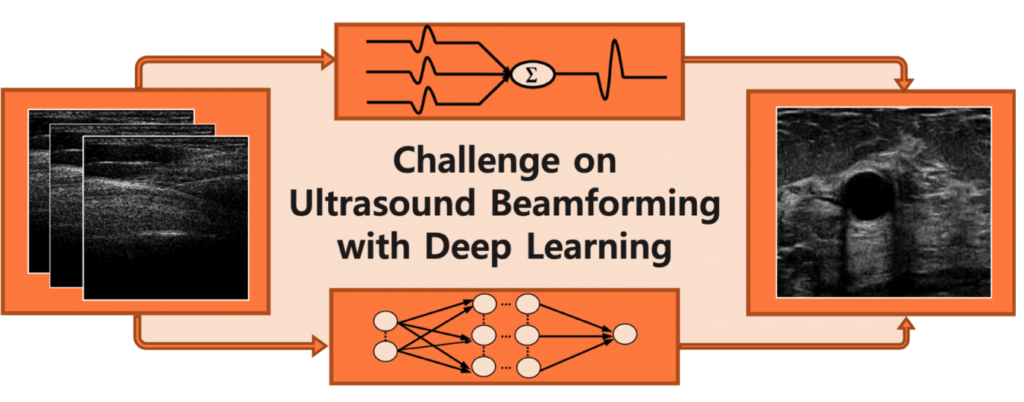

CUBDL Resources

The Challenge on Ultrasound Beamforming with Deep Learning (CUBDL) was offered as a component of the 2020 IEEE International Ultrasonics Symposium. Prof. Bell was the primary organizer of this challenge, which resulted in the following CUBDL-related resources:

Additional details about CUBDL-related resources are available in the following three citations required for use of these resources:

- MAL Bell, J Huang, D Hyun, YC Eldar, R van Sloun, M Mischi, “Challenge on Ultrasound Beamforming with Deep Learning (CUBDL)”, Proceedings of the 2020 IEEE International Ultrasonics Symposium, 2020 [pdf]

- Muyinatu A. Lediju Bell, Jiaqi Huang, Alycen Wiacek, Ping Gong, Shigao Chen, Alessandro Ramalli, Piero Tortoli, Ben Luijten, Massimo Mischi, Ole Marius Hoel Rindal, Vincent Perrot, Hervé Liebgott, Xi Zhang, Jianwen Luo, Eniola Oluyemi, Emily Ambinder, “Challenge on Ultrasound Beamforming with Deep Learning (CUBDL) Datasets”, IEEE DataPort, 2019 [Online]. Available: http://dx.doi.org/10.21227/f0hn-8f92

- D. Hyun, A. Wiacek, S. Goudarzi, S. Rothlübbers, A. Asif, K. Eickel, Y. C. Eldar, J. Huang, M. Mischi, H. Rivaz, D. Sinden, R.J.G. van Sloun, H. Strohm, M. A. L. Bell, Deep Learning for Ultrasound Image Formation: CUBDL Evaluation Framework & Open Datasets, IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, 68(12):3466-3483, 2021 [pdf]

Ultrasound Toolbox

The UltraSound Toolbox (USTB) is a free MATLAB toolbox for processing ultrasonic signals. The primary purpose of the USTB is to facilitate the comparison of imaging techniques and the dissemination of research results. The PULSE Lab is proud to collaborate on this effort by contributing SLSC beamforming to the broader ultrasound community (http://www.ustb.no/examples/advanced-beamforming/short-lag-spatial-coherence-slsc/), including heart and phantom datasets and the SLSC beamforming code, all of which are freely available. Additional datasets and beamforming code can be found on the USTB website.

Photoacoustic Deep Learning Datasets and Code

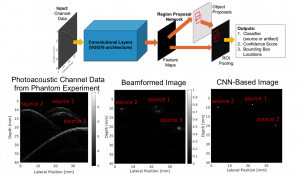

Our lab is pioneering the application of deep learning to bypass traditional beamforming steps and use raw channel data to directly display specific features of interest in ultrasound and photoacoustic images. We train with simulated data and transfer the networks to experimental data. Our trained deep neural networks, experimental datasets, and instructions for use are freely available in order to foster reproducibility and future comparisons with our associated publications on this topic.

Code: https://github.com/derekallman/Photoacoustic-FasterRCNN

Publication: https://ieeexplore.ieee.org/document/8345287

If you use these datasets or code, please cite:

- D Allman, A Reiter, MAL Bell, Photoacoustic source detection and reflection artifact removal enabled by deep learning, IEEE Transactions on Medical Imaging, 37(6):1464-1477, 2018 [pdf]

- D Allman, A Reiter, MAL Bell, Photoacoustic Source Detection and Reflection Artifact Deep Learning Dataset, IEEE DataPort, 2018. [Online]. Available: http://dx.doi.org/10.21227/H2ZD39.