Congratulations to Derek Allman! His paper entitled “Photoacoustic Source Detection and Reflection Artifact Removal Enabled by Deep Learning” was accepted for publication in IEEE Transactions on Medical Imaging. The paper is expected to appear in the Special Issue on Machine Learning for Image Reconstruction.

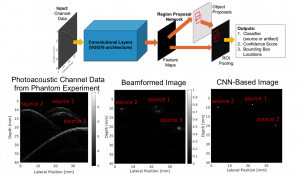

This work is the first to use deep convolutional neural networks (CNNs) as an alternative to the photoacoustic beamforming and image reconstruction process. We used simulations to train CNNs to identify sources and reflection artifacts in raw photoacoustic channel data, reformatted the network outputs into usable images that we call CNN-Based images, and transferred the trained networks to operate on experimental data. Multiple parameters were varied during training, including channel noise, number of sources, number of artifacts, sound speed, signal amplitude, transducer model, lateral and axial locations of sources and artifacts, and spacing between sources and artifacts. Classification accuracy on simulated and experimental data ranged from 96% to 100% when the channel signal-to-noise ratio was -9 dB or greater and when sources were located at trained locations. Over 99% of the results had submillimeter location accuracy. Our CNN-Based images have high contrast, no artifacts, and resolution that rivals that of traditional photoacoustic images acquired with low-frequency ultrasound probes.
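To make the idea of a CNN-Based image a little more concrete, here is a minimal, purely illustrative sketch. It is not the method from the paper. It assumes each network output can be reduced to a point location (lateral and axial position in mm), a class label ("source" or "artifact"), and a confidence score. Everything in it is hypothetical and chosen only for illustration: the `Detection` type, the `render_cnn_based_image` function, the grid size, the disk radius, and the score threshold. The sketch keeps confident source detections, discards reflection artifacts, and draws each remaining source as a small disk on an otherwise empty image.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Detection:
    # Hypothetical representation of one network output (an assumption, not the paper's format).
    x_mm: float   # lateral position of the detected point (mm)
    z_mm: float   # axial (depth) position of the detected point (mm)
    label: str    # "source" or "artifact"
    score: float  # classifier confidence in [0, 1]


def render_cnn_based_image(detections, width_mm=20.0, depth_mm=40.0,
                           pixel_mm=0.1, disk_radius_mm=0.5,
                           score_threshold=0.5):
    """Draw each confident source detection as a filled disk; drop artifacts.

    All parameter values are illustrative assumptions.
    """
    nx = int(round(width_mm / pixel_mm))
    nz = int(round(depth_mm / pixel_mm))
    # Pixel-center coordinates: lateral axis centered on 0, depth starting at 0.
    x = (np.arange(nx) + 0.5) * pixel_mm - width_mm / 2
    z = (np.arange(nz) + 0.5) * pixel_mm
    xx, zz = np.meshgrid(x, z)  # both arrays have shape (nz, nx)

    image = np.zeros((nz, nx))
    for d in detections:
        # Reflection artifacts and low-confidence detections are not displayed.
        if d.label != "source" or d.score < score_threshold:
            continue
        inside = (xx - d.x_mm) ** 2 + (zz - d.z_mm) ** 2 <= disk_radius_mm ** 2
        image[inside] = 1.0
    return image


if __name__ == "__main__":
    dets = [
        Detection(0.0, 15.0, "source", 0.98),    # kept
        Detection(0.0, 25.0, "artifact", 0.95),  # removed as a reflection artifact
        Detection(5.0, 30.0, "source", 0.20),    # removed as low confidence
    ]
    image = render_cnn_based_image(dets)
    print(image.shape)  # (400, 200)
```

Because the displayed image is built only from detected source locations, any reflection that is classified as an artifact simply never appears. In this toy version, resolution is governed by the assumed disk radius rather than by the ultrasound probe's bandwidth.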

Citation: D Allman, A Reiter, MAL Bell, Photoacoustic Source Detection and Reflection Artifact Removal Enabled by Deep Learning, IEEE Transactions on Medical Imaging, 37(6):1464-1477, 2018 [pdf | datasets | code]